In 2035, it may not be your income, your debt, or your assets that determine your access to financial services. It may be your behavior.

While the phrase “digital surveillance” often conjures dystopian fears or vague corporate monitoring, the real-world mechanisms of behavioral data collection are already deeply embedded in modern banking, commerce, and governance. Now they are converging into something more explicit: a model of financial access shaped not just by what you earn or spend, but by who you are, what you say, and how closely your choices align with accepted norms.

Recent leaks from financial policy groups suggest this isn’t theoretical — it’s procedural. Over the next decade, the integration of AI-driven behavioral profiling into financial infrastructure may give rise to a new system of conditional access — where every transaction, donation, or digital interaction contributes to a dynamic reputation score. One that determines how, and whether, you can participate in the economy at all.

This is not simply about privacy — it’s about power.

📜 From Credit Scores to Behavioral Profiling: A Timeline of Convergence

For most of the 20th century, financial credibility was measured in dollars and cents: income, debt-to-income ratio, and payment history. But over the past 25 years, the financial sector has undergone a quiet transformation. Institutions once focused purely on solvency are now looking at “social risk.”

The new currency is trustworthiness, measured through patterns of behavior derived from digital surveillance infrastructure.

Let’s trace the progression:

2001–2008: In the wake of 9/11, anti-terror finance laws like the USA PATRIOT Act expanded banks’ responsibility to monitor suspicious behavior, and Know Your Customer (KYC) identity checks became a formal legal requirement for US financial institutions. The goal was crime prevention, but the shift established a precedent: banks as moral gatekeepers.

2010s: Big Tech began accumulating behavioral data at unprecedented scale — search histories, purchases, content consumption, even dwell time on specific posts. Data brokers expanded. Payment platforms and social media converged.

2014–2015: China introduced its Social Credit System, set out in a 2014 State Council planning outline and piloted in the years that followed. It became a high-profile example of what a behavioral scoring model could look like when integrated across public and private institutions. Though often sensationalized in the West, its underlying logic (reward conformity, penalize deviation) is mirrored in Western policy shifts.

2020–2023: During and after the COVID-19 pandemic, financial penalties and service restrictions were increasingly tied to behaviors deemed socially irresponsible or politically disruptive, from misinformation to protest participation. In February 2022, Canada invoked its Emergencies Act and used those powers to freeze protesters’ bank accounts without court orders or prior due process. Platforms like PayPal and GoFundMe suspended accounts based on ideological content, not illegal activity.

Today: Banks in the UK and EU are trialing climate-linked spending trackers and “carbon budgeting tools.” ESG (Environmental, Social, Governance) frameworks are increasingly embedded into financial decision-making, not just investment.

🧩 The World Economic Forum has published a detailed overview of how AI and ESG data are shaping the financial future — often with little public debate. (Read more at WEF)

Together, these developments reveal a systemic shift: from neutral banking to values-based financial governance — a system where access is shaped by alignment.

🧠 Digital Surveillance as Infrastructure, Not Exception

This transformation wouldn’t be possible without the digital surveillance architecture already in place. And that architecture has grown not through public mandate, but through convenience.

Your smartphone, fitness tracker, search history, and online purchases create a continuous stream of metadata: ostensibly anonymized, but often trivially re-identifiable. Each app asks for permissions, and each terms-of-service agreement is a binding contract that few people ever read.

While privacy concerns are often framed as personal — whether you “have something to hide” — the real issue is structural. Your data is not just being observed — it is being interpreted. Scored. Categorized. Shared. Combined.

And increasingly, it is being used to train the very models that will one day decide how trustworthy, risky, or valuable you are to a financial institution.

This isn’t the paranoia of conspiracy theory — it’s the logic of predictive analytics.

In the words of Dr. Shoshana Zuboff, author of The Age of Surveillance Capitalism:

“Surveillance capitalists claim our private experience for translation into behavioral data. Their systems are engineered not just to predict behavior, but to shape it.”

Financial institutions are beginning to adopt this logic. Risk is no longer just fiscal — it is reputational. And reputational data is behavioral data.

⚖️ A Model for “Ethical Finance” — or Digital Coercion?

Proponents of this shift argue that banks, like all responsible institutions, have a duty to prevent harm. Why, they ask, should someone flagged for spreading misinformation or participating in disruptive protests be treated as financially neutral?

Dr. Elaine Porter, a financial risk advisor who consulted on early behavioral finance models, described it this way:

“We already account for financial risk. Why shouldn’t we account for social risk — especially in a world where misinformation and polarization carry real-world consequences?”

From this view, digital surveillance becomes a moral instrument — ensuring that those who destabilize society are not rewarded with the same privileges as those who support it.

But critics argue this framework is dangerously circular. Who defines destabilizing behavior? Which sources count as legitimate? And how do we prevent legitimate dissent — or inconvenient truth-telling — from being swept into the same net as fraud or incitement?

In an open letter published by the Electronic Frontier Foundation, several legal scholars warned:

“When access to fundamental services like banking becomes contingent on ideological conformity, we have crossed from risk management into censorship-by-proxy.”

🧬 Psychological and Sociological Implications

The effects of such a system would not be confined to spreadsheets or approval queues. They would reshape behavior.

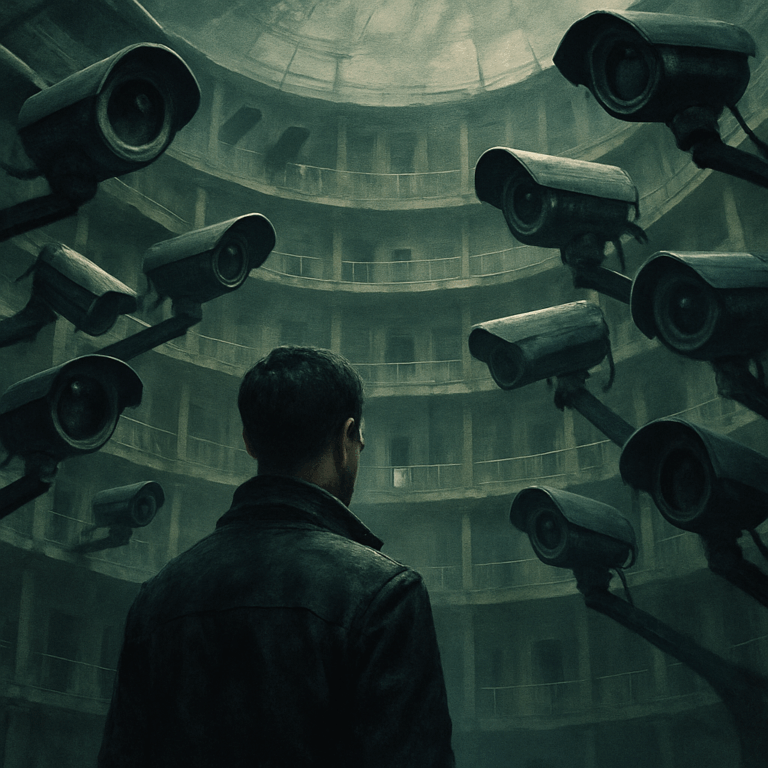

The mere awareness of being watched, particularly when tied to social consequences, alters how people express themselves. In psychology, this is known as the Hawthorne effect. In sociology, it echoes Foucault’s reading of Bentham’s panopticon: a system of control in which the possibility of surveillance leads individuals to police themselves.

Financial autonomy is among the final remaining spheres of independence. If digital surveillance begins to govern financial inclusion, then self-censorship is no longer a theoretical concern — it becomes economic self-preservation.

And this transformation won’t be felt evenly. Wealth insulates. Corporations and the politically connected often operate with exemptions or opaque backdoors. Ordinary citizens will not.

In the name of fairness, we may usher in a system where only the powerful can afford to dissent, and everyone else learns to comply.

🚫 From Conditional Access to Conditional Existence

The convergence of digital surveillance, AI risk modeling, and centralized financial control is not simply a future concern — it is an emerging present. Consider the infrastructure already in motion:

- Central Bank Digital Currencies (CBDCs) being piloted globally, with programmable features and traceable transactions.
- Biometric authentication becoming standard for banking apps, tying identity more tightly to digital footprints.
- Carbon scoring tools integrated into consumer banking dashboards, subtly nudging spending behavior.
- Political donations and affiliations already monitored in several fintech underwriting algorithms.
- Cross-platform censorship, where banking services are suspended in coordination with social media bans.

This isn’t speculative. It’s the predictable outcome of systems designed to reward conformity and punish deviation — even if that deviation is peaceful, evidence-based, or ethically grounded.

🧭 2035 and the Illusion of Consent

If current trends continue, by 2035, behavioral finance may become not just a parallel system, but the dominant one. This could mean:

- Denial of credit based on non-financial behaviors.
- Restricted travel or purchases based on dynamic risk scores.
- Subscription-based access to essential services, contingent on ideological alignment.
- Pre-emptive account holds or freezes triggered by predictive analytics.

The erosion of financial neutrality would mark a critical tipping point — one where the ability to exist independently of state-approved narratives becomes functionally impossible.

This is not simply about dystopia. It is about consent. At what point did society agree that ideological behavior should influence mortgage approval? At what hearing was it decided that online speech could determine food access?

There is no referendum for soft tyranny. Only drift — and normalization.

🛠️ The Rational Response: Resistance Through Redundancy

What options remain?

Critics and technologists alike are exploring alternatives — decentralized finance, privacy-preserving technologies, and legislative firewalls. But for these efforts to succeed, the public must be aware of the stakes.

This is no longer about convenience or privacy preferences. It is about autonomy. The right to think, speak, and live without algorithmic gatekeeping.

More importantly, it is about values. The phrase “digital surveillance” no longer describes a tool. It describes a worldview: that trust is conditional, behavior must be shaped, and freedom must be earned.

Resisting that worldview requires more than outrage. It requires infrastructure. Parallel systems. Transparent policy. A public willing to ask hard questions — and a media willing to publish the answers.

Because in the end, the real risk is not that this system fails.

It’s that it works — and people learn to live with it.